Diabetes Control in China: Building a Supervised Machine Learning Diabetes Predictor based on living circumstances of Chinese citizens aged 45 and above


Sajal Kabiraj, Nico Kling, Md Nur Alam Siddik / Volume 13 / Issue 2 / (April-September 2020)

ABSTRACT

Diabetes continues to be a leading public health challenge in China, especially given its ageing society. In this research paper, we explore how a simple supervised machine learning model built in Python, based on the China Health and Retirement Longitudinal Study (CHARLS), can predict the risk of a Chinese citizen aged 45 and above having diabetes/high blood sugar. Three algorithms, Random Forest, Support Vector Machine and Logistic Regression, are compared. The respective models are built with the scikit-learn and imbalanced-learn libraries. The Synthetic Minority Oversampling Technique (SMOTE) is applied to the training set to tackle class imbalance. Findings show that the Support Vector Machine is best suited to predict diabetes in this study, but also that predictors focusing on fasting glucose level yield better results. We conclude that more research on machine learning in diabetes control is necessary, especially for the elderly.

Keywords: Diabetes, Supervised Machine Learning, China Health and Retirement Longitudinal Study, Health Care Management

1.0 INTRODUCTION

China has an ageing population, with an estimated average age of 50 by 2070 and a steadily increasing old-age dependency ratio (Statista, 2019). In addition, the People's Republic of China faces several healthcare problems, such as the rapidly rising prevalence of diabetes (Xu et al., 2013). This matters because diabetes and other chronic diseases, if not treated properly, increase the risk of disability or even premature death, and thus place a burden on both the economy and the society of the People's Republic of China (Soumya & Srilatha, 2011; Papatheodorou et al., 2015; Nather et al., 2008). In medicine, diabetes is diagnosed through fasting blood glucose, glucose tolerance and random blood glucose levels (Iancu et al., 2008; Cox and Edelman, 2009; American Diabetes Association, 2012). Recent studies have shown that machine learning can be used to make a preliminary judgment about diabetes from patients' routine physical examination data, and can serve as a reference for doctors (Lee and Kim, 2016; Alghamdi et al., 2017; Kavakiotis et al., 2017). Numerous algorithms have been used in recent research to predict diabetes. Zou et al. (2018) used Neural Networks, Random Forests and Decision Trees, and found that fasting glucose is the most important index for prediction.

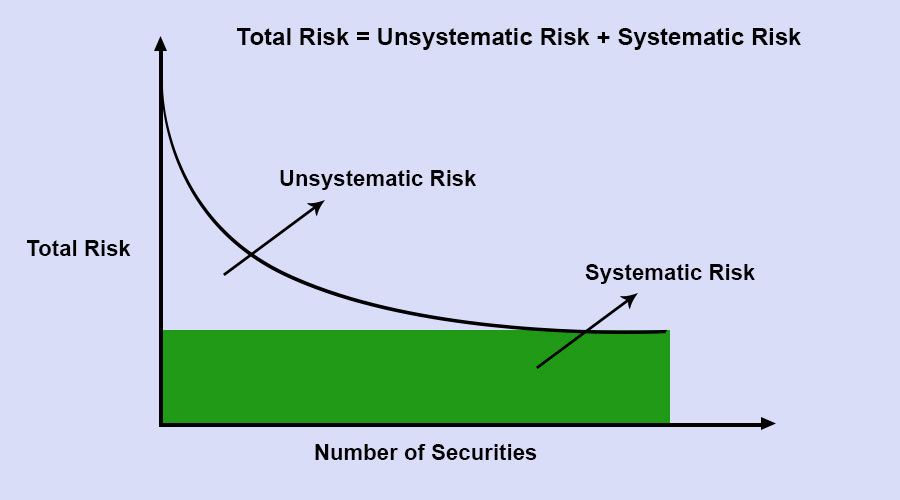

Kavakiotis et al. (2017) conducted a systematic review of machine learning and data mining techniques and tools in diabetes research. They found that 85 percent of the approaches were supervised and only 15 percent unsupervised, and that support vector machines were the most successful algorithms in diabetes prediction. While Georga et al. (2013) used support vector machines and focused on glucose, Razavian et al. (2015) used logistic regression to predict the onset of type 2 diabetes. Duygu and Esin (2011) focused on dimension reduction and feature extraction through Linear Discriminant Analysis. In addition, a growing number of studies use ensemble methods to improve accuracy (Kavakiotis et al., 2017). Nearly all recent papers use fasting glucose level as their main predictor. To gain new insight, this study instead uses predictors such as demographic information and activity level to predict diabetes. The reason is that while type 1 diabetes is mostly caused by genes and environmental factors such as viruses, the most common form, type 2 diabetes, is caused mostly by high-risk behaviours, such as smoking, alcohol consumption or poor diets, or by environmental factors such as air pollution (Batis et al., 2014; Chan et al., 2009). The algorithms used to build the prediction model are Random Forests, Logistic Regression and Support Vector Machines.

2.0 MATERIAL AND METHODS

The algorithms were chosen after weighing constraints such as processing power and prediction speed, as well as the type of problem (classification or regression). Random forests, as ensembles of decision trees, are a favoured algorithm in medical machine learning because of their classification power. Logistic regression models the relationship between the features and the probability of a binary outcome. Finally, support vector machines are included because they are the most widely used algorithms in diabetes prediction.

The foundation of a well-functioning machine learning model is the data it uses. Therefore, the researchers used parts of the China Health and Retirement Longitudinal Study (CHARLS), a longitudinal survey of persons in China aged 45 or older. Using CHARLS ensures that the research fits the needs of the ageing Chinese society. The parts used to build the model in Python with scikit-learn were the health status and functioning section and parts of the demographic section of CHARLS 2015. These sections were chosen because they contain information on the participants' diabetes status and living conditions, while also being nationally representative. As the dataset's dependent variable is heavily imbalanced, with less than 5 percent of the participants suffering from diabetes, the synthetic minority oversampling technique (SMOTE) is applied to the training set. Diabetes is used as the binary target variable, and the models are evaluated by comparing their accuracy, precision, recall and F1-scores.

3.0 RESULTS

Machine learning research seeks to give computers knowledge through data, observations and interaction with the world, so that they can generalize to new settings (Bengo, 2019). If the data in a machine learning process is labelled, meaning that each instance comes with the known value of the outcome to be predicted, one speaks of supervised machine learning.
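The supervised workflow described above (labelled data, a held-out test set and the three compared classifiers) can be sketched with scikit-learn as follows. This is a minimal illustration, not the authors' code: synthetic data from `make_classification` with roughly 5 percent positives stands in for the CHARLS features, default hyperparameters are assumed, the feature scaling in front of Logistic Regression and the Support Vector Machine is an added assumption, and the SMOTE step is left out to keep the skeleton short.

```python
# Minimal sketch of the supervised workflow: labelled data -> train/test split
# -> fit three classifiers -> evaluate on the held-out test set.
# Assumption: synthetic data stands in for the CHARLS features (not the study data).
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Labelled data: X holds the features, y the known binary outcome (1 = diabetes).
X, y = make_classification(n_samples=2000, n_features=10, n_informative=4,
                           weights=[0.95, 0.05], random_state=0)

# Stratify so the rare positive class appears in both splits in the same proportion.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

models = {
    "Random Forest": RandomForestClassifier(random_state=0),
    # Assumption: standardize features for the scale-sensitive models.
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "Support Vector Machine": make_pipeline(StandardScaler(), SVC()),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)        # learn only from the labelled training split
    y_pred = model.predict(X_test)     # predict labels for unseen instances
    results[name] = {
        "accuracy": accuracy_score(y_test, y_pred),
        "precision": precision_score(y_test, y_pred, zero_division=0),
        "recall": recall_score(y_test, y_pred),
        "f1": f1_score(y_test, y_pred, zero_division=0),
    }

for name, scores in results.items():
    print(name, {metric: round(value, 3) for metric, value in scores.items()})
```

Stratifying the split keeps the rare positive class present in the test set, which matters when fewer than 5 percent of the instances are positive.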

- Understanding the Problem

To understand the problem, the data scientist must first establish what kind of data is at hand, labelled or unlabelled, in order to categorize the problem as supervised or unsupervised. In supervised machine learning, there are two problem categories: regression problems, which are solved by making predictions on a continuous scale, and classification problems, which are solved by predicting discrete categories. A regression model fits a continuous function to the data, whereas a classifier divides the data into classes. Classification problems can further be divided into binary classification, with only two classes, and multi-class classification, with more than two classes.
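scikit-learn can infer which of these problem categories a target variable belongs to. The short sketch below applies `sklearn.utils.multiclass.type_of_target` to made-up label vectors; the values are illustrative only and not taken from CHARLS.

```python
from sklearn.utils.multiclass import type_of_target

# Illustrative (made-up) targets.
# Two classes, e.g. diabetes yes/no -> binary classification
binary = type_of_target([0, 1, 1, 0, 1])
# More than two discrete classes -> multi-class classification
multiclass = type_of_target([0, 1, 2, 1, 0])
# Real-valued targets, e.g. a glucose reading -> regression
continuous = type_of_target([5.4, 7.1, 6.3, 9.8])

print(binary, multiclass, continuous)  # binary multiclass continuous
```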

- Identification of required data

Once the problem is understood, the data required to solve it must be identified. Preferably, a domain expert suggests which attributes and features are important. If no expert is available, brute-forcing, meaning simply measuring all available data, can be used. However, datasets collected by brute-forcing require significant pre-processing, as they often contain missing feature values or distortions in the data, called noise (Zhang et al., 2002).

- Data pre-processing

When analysing data, pre-processing is an important step, as some classification techniques, such as Logistic Regression, are highly sensitive to missing data, noise in the data set or outliers. The process generally consists of data cleaning and/or data imputation, and feature reduction. The basic principle of data cleaning is to analyse the causes of so-called dirty data and to propose cleaning rules that improve data quality. Depending on the context, NULL values are one example of dirty data: they signify missing or unknown values.
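A set of cleaning rules of this kind can be sketched with pandas. The column names (`diabetes`, `hypertension`, `weight_kg`) and the sentinel code `-9` for "don't know" are hypothetical, not actual CHARLS variables. The rule for the diagnosis columns mirrors the assumption stated in Section 4.0, that a participant who does not report a condition is treated as not having it; the numeric column is imputed with its median, which is one common choice among several.

```python
import numpy as np
import pandas as pd

# Hypothetical survey extract; -9 is an assumed sentinel code for "don't know".
raw = pd.DataFrame({
    "diabetes": [1, None, 0, None, 1],
    "hypertension": [None, 1, -9, 0, None],
    "weight_kg": [61.0, None, 72.5, -9, 58.0],
})


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Apply simple cleaning rules and return a cleaned copy."""
    df = df.replace(-9, np.nan)  # rule 1: sentinel codes mean "unknown"
    diagnosis_cols = ["diabetes", "hypertension"]
    # rule 2 (assumption from Section 4.0): an unreported condition counts as absent
    df[diagnosis_cols] = df[diagnosis_cols].fillna(0).astype(int)
    # rule 3: impute the continuous column with its median
    df["weight_kg"] = df["weight_kg"].fillna(df["weight_kg"].median())
    return df


cleaned = clean(raw)
print(cleaned)
```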

Three main types of missing data exist: missing completely at random (MCAR), missing at random (MAR) and missing not at random (MNAR). For MCAR data, the missingness is unrelated both to the observed values and to the missing value itself; in other words, it is not systematic. For MAR data, the propensity of a value to be missing is systematically related to observed data, but not to the missing value itself; for example, if men are more likely than women to report their weight, weight data would be MAR. For MNAR data, the propensity of a value to be missing is related to the hypothetical value itself, for example when sick people drop out of a longitudinal study (Graham, 2009). When facing missing data, the data scientist must understand why the data is missing in order to proceed with either deletion or imputation through various means (Batista & Monard, 2003). To reduce the training time and increase the efficiency of supervised machine learning models, feature selection is used: the process of first identifying and then removing irrelevant and redundant variables (Yu & Liu, 2004).
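Why the missingness mechanism matters can be shown with a small NumPy simulation of the weight example above. All numbers (average weights, response rates, sample size) are invented for illustration. Under MCAR, deleting incomplete cases leaves the mean essentially unbiased; under MAR, where men report their weight more often than women, deletion biases the mean upwards. Because the missingness depends only on the observed variable sex, imputing the mean within each sex removes most of that bias.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 100_000
is_male = rng.random(n) < 0.5
# Assumed weights in kg: men heavier on average than women (illustrative values).
weight = np.where(is_male, rng.normal(75, 10, n), rng.normal(60, 10, n))
true_mean = weight.mean()

# MCAR: every value has the same 30 % chance of being missing.
mcar_missing = rng.random(n) < 0.3
mcar_mean = weight[~mcar_missing].mean()  # deletion of missing cases

# MAR: missingness depends on the observed variable sex
# (assumed: 10 % of men and 50 % of women do not report their weight).
p_missing = np.where(is_male, 0.1, 0.5)
mar_missing = rng.random(n) < p_missing
mar_deletion_mean = weight[~mar_missing].mean()

# Imputation conditional on the observed variable: group mean by sex.
imputed = weight.copy()
for group in (True, False):
    in_group = is_male == group
    observed_mean = weight[in_group & ~mar_missing].mean()
    imputed[in_group & mar_missing] = observed_mean
mar_imputed_mean = imputed.mean()

print(f"true {true_mean:.2f} | MCAR deletion {mcar_mean:.2f} | "
      f"MAR deletion {mar_deletion_mean:.2f} | MAR group imputation {mar_imputed_mean:.2f}")
```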

- Definition of the training set

Once the data set has been cleaned, a training set must be defined. In a supervised classification problem, the training data is the part of the initial data set used to learn the rules for assigning instances to the different classes. Although simple, the idea of training data is the very foundation of the learning process. Two competing concerns arise when choosing the split: with less training data, the parameter estimates have greater variance; with less testing data, the performance estimate has greater variance. In the end, the right split depends on the total number of instances. Another concern specific to classification problems is imbalanced training sets, in which the classes are represented by very different numbers of data points. This can lead to faulty learning, as the model sees too few examples of the minority class (Japkowicz & Stephen, 2002).
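The study handles this imbalance with SMOTE, applied only to the training set (Section 2.0). In imbalanced-learn this is the `SMOTE` class with its `fit_resample` method; to keep the example dependency-light, the sketch below re-implements the core idea with NumPy and scikit-learn's `NearestNeighbors`: each synthetic minority sample is placed at a random point on the line segment between a minority instance and one of its k nearest minority neighbours. The function name `smote`, k = 5 and the toy data are assumptions for illustration, not the study's configuration.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import NearestNeighbors


def smote(X, y, minority=1, k=5, random_state=0):
    """Minimal SMOTE sketch (hypothetical helper): oversample until classes balance."""
    rng = np.random.default_rng(random_state)
    X_min = X[y == minority]
    n_new = int((y != minority).sum() - len(X_min))  # samples needed to balance
    if n_new <= 0:
        return X, y
    # k + 1 neighbours because each point is its own nearest neighbour.
    nn = NearestNeighbors(n_neighbors=k + 1).fit(X_min)
    _, neighbours = nn.kneighbors(X_min)
    base = rng.integers(0, len(X_min), n_new)               # random minority seeds
    pick = neighbours[base, rng.integers(1, k + 1, n_new)]  # one of their k neighbours
    gap = rng.random((n_new, 1))                            # position on the segment
    synthetic = X_min[base] + gap * (X_min[pick] - X_min[base])
    X_res = np.vstack([X, synthetic])
    y_res = np.concatenate([y, np.full(n_new, minority)])
    return X_res, y_res


# Assumed toy data: 190 negatives, 10 positives (5 % positives).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 1, (190, 2)), rng.normal(2, 0.5, (10, 2))])
y = np.array([0] * 190 + [1] * 10)

# Split first, then oversample the training split only.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)
X_train_res, y_train_res = smote(X_train, y_train)
print(np.bincount(y_train), "->", np.bincount(y_train_res), "| test:", np.bincount(y_test))
```

Splitting before oversampling keeps synthetic points out of the test set, so the evaluation still reflects the real class ratio.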

- Training, evaluation of results with the test set and parameter tuning

When evaluating the results of the training, a test set is used. It is the other split of the initial data set and serves to assess the performance of the classification model. Four metrics are used in this study to evaluate a classification model. The first is accuracy, the ratio of correctly predicted observations to all observations. The second is precision, the ratio of correctly predicted positive observations to all predicted positive observations. The third is recall, the ratio of correctly predicted positive observations to all observations that actually belong to the positive class. The fourth, the F1-score, is the harmonic mean of precision and recall, and is therefore only high when both are high. If the resulting metrics are low, the model's parameters can be tuned, which again depends on the algorithm used. Once better results are achieved, the model can be deployed.
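The four metrics can be computed directly from the confusion-matrix counts (true and false positives and negatives). The counts below are made up to mimic a test set with 5 percent positives. The second case illustrates why accuracy alone is misleading on imbalanced data: a classifier that never predicts diabetes still reaches 95 percent accuracy while its recall is zero.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are real
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real positives that are found
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical test set: 1000 participants, 50 of them with diabetes.
screening = classification_metrics(tp=30, fp=150, fn=20, tn=800)
always_negative = classification_metrics(tp=0, fp=0, fn=50, tn=950)

print(screening)        # accuracy 0.83, precision ≈ 0.167, recall 0.6, F1 ≈ 0.261
print(always_negative)  # accuracy 0.95, precision 0.0, recall 0.0, F1 0.0
```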

- Logistic Regression

Logistic regression models the relationship between the features and the probability of a particular outcome. It is built on the sigmoid (logistic) function, which maps a linear combination of the features to a value between 0 and 1; its output is therefore the probability that a given input belongs to a certain class. The coefficients are learned through maximum likelihood estimation (Pant, 2019).
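A minimal NumPy sketch of the two ideas in this paragraph: the sigmoid maps a linear score to a probability between 0 and 1, and the weights are found by maximizing the log-likelihood, here by plain gradient ascent. The toy data, learning rate and iteration count are assumptions; scikit-learn's `LogisticRegression` solves the same (by default regularized) problem with dedicated solvers.

```python
import numpy as np


def sigmoid(z):
    """Logistic function: maps any real score to the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X, y, lr=0.1, n_iter=5000):
    """Maximum likelihood estimation of bias and weights by gradient ascent."""
    Xb = np.hstack([np.ones((len(X), 1)), X])  # prepend a column of ones for the bias
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)                    # predicted probabilities
        w += lr * Xb.T @ (y - p) / len(y)      # gradient of the mean log-likelihood
    return w


def log_likelihood(w, X, y):
    Xb = np.hstack([np.ones((len(X), 1)), X])
    p = sigmoid(Xb @ w)
    return np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))


# Assumed toy data: one feature; higher values make the positive class more likely.
rng = np.random.default_rng(0)
x = rng.normal(0, 1, (500, 1))
y = (rng.random(500) < sigmoid(-1.0 + 2.0 * x[:, 0])).astype(float)

w = fit_logistic(x, y)
probs = sigmoid(np.hstack([np.ones((500, 1)), x]) @ w)
print("estimated bias and slope:", w.round(2))  # roughly -1 and 2
```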

- Support Vector Machines

Support Vector Machines (SVM) are based on the idea of a margin: the region on either side of a hyperplane that separates two classes. Maximizing the margin creates the largest possible distance between the hyperplane and the nearest instances of each class, which reduces the expected generalization error. If the data is linearly separable and an optimal separating hyperplane has been found, the data points that lie on its margin are called support vectors, and the hyperplane is a linear combination of those points (Suykens & Vandewalle, 1999). In classification, the SVM thus finds the optimal, margin-maximizing hyperplane, which best separates the instances into the different classes.
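The statement that the separating hyperplane is a linear combination of the support vectors can be checked with scikit-learn's `SVC`. For a linear kernel, `dual_coef_` holds the products of each support vector's dual coefficient and its class sign, so multiplying it with `support_vectors_` reproduces the weight vector `coef_`; the margin width is 2/‖w‖. The two toy clusters and the large `C` (approximating a hard margin on separable data) are assumptions for illustration.

```python
import numpy as np
from sklearn.svm import SVC

# Assumed data: two linearly separable toy clusters.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal([-2, -2], 0.5, (20, 2)), rng.normal([2, 2], 0.5, (20, 2))])
y = np.array([0] * 20 + [1] * 20)

# A large C approximates a hard-margin SVM on separable data.
clf = SVC(kernel="linear", C=1e3).fit(X, y)

w = clf.coef_[0]                                          # normal vector of the hyperplane
w_from_svs = (clf.dual_coef_ @ clf.support_vectors_)[0]   # linear combination of support vectors
margin_width = 2 / np.linalg.norm(w)

print("support vectors:", len(clf.support_vectors_))
print("w from coef_:", w.round(3), "| w from support vectors:", w_from_svs.round(3))
print("margin width:", round(margin_width, 3))
```

Only the few points on the margin receive non-zero dual coefficients, which is why the solution depends on the support vectors alone.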

- Random Forest

Random forests are ensembles of many classification trees. The algorithm fits many classification trees, each on a bootstrap sample (a random sample drawn with replacement) of the data set, and combines their predictions. Instead of searching for the most important feature when splitting a node, each tree searches for the best feature among a random subset of features, hence the name. In classification, the forest predicts by taking a majority vote over the classes predicted by the individual trees (Breiman, 2001).
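The two sources of randomness and the majority vote can be sketched on top of scikit-learn's `DecisionTreeClassifier`: each tree is trained on a bootstrap sample, `max_features="sqrt"` restricts every split to a random subset of features, and the forest predicts by majority vote. The helper names `fit_forest` and `predict_forest`, the number of trees and the toy data are assumptions. Note that scikit-learn's own `RandomForestClassifier` averages the trees' predicted probabilities instead of counting hard votes, so its predictions can occasionally differ from this sketch.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=50, random_state=0):
    """Hypothetical helper: bootstrap rows per tree, random feature subset per split."""
    rng = np.random.default_rng(random_state)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(X), len(X))  # bootstrap: sample rows with replacement
        tree = DecisionTreeClassifier(max_features="sqrt",
                                      random_state=int(rng.integers(1_000_000)))
        trees.append(tree.fit(X[idx], y[idx]))
    return trees


def predict_forest(trees, X):
    """Hypothetical helper: majority vote over the labels predicted by each tree."""
    votes = np.stack([tree.predict(X) for tree in trees])  # shape (n_trees, n_samples)
    return np.array([np.bincount(column).argmax() for column in votes.T])


# Assumed toy data: 300 training and 100 held-out instances.
X, y = make_classification(n_samples=400, n_features=8, n_informative=4, random_state=0)
trees = fit_forest(X[:300], y[:300])
predictions = predict_forest(trees, X[300:])
accuracy = (predictions == y[300:]).mean()
print(f"held-out accuracy of the 50-tree vote: {accuracy:.2f}")
```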

- Results of the different models

The Random Forest model produced a precision of 31.81 percent, an accuracy of 94.61 percent and a recall of 9.72 percent. The Logistic Regression model had a precision of 12.9 percent, an accuracy of 78.5 percent and a recall of 59.7 percent. The Support Vector Machine had a precision of 14.5 percent, a recall of 58.3 percent and an accuracy of 81.3 percent. The Support Vector Machine had the highest F1-score with 22.5 percent, followed by Logistic Regression with 20 percent and the Random Forest with 14 percent. Although the Random Forest reached the highest accuracy, its recall of 9.72 percent means that it missed most participants with diabetes; in a data set in which fewer than 5 percent of participants have diabetes, accuracy is therefore a poor guide, and the F1-score is the more informative metric. Therefore, the Support Vector Machine was the best model in this study for diabetes detection without glucose level as a predictor, with an accuracy of 81.3 percent and an F1-score of 22.5 percent.
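The choice of ranking metric decides which model comes out on top. The short sketch below uses only the scores reported in this section and shows that ranking by accuracy would select the Random Forest, ranking by recall the Logistic Regression, while ranking by F1-score, which balances precision and recall on the rare diabetes class, selects the Support Vector Machine.

```python
# Scores (in percent) as reported in this section.
reported = {
    "Random Forest": {"accuracy": 94.61, "precision": 31.81, "recall": 9.72, "f1": 14.0},
    "Logistic Regression": {"accuracy": 78.5, "precision": 12.9, "recall": 59.7, "f1": 20.0},
    "Support Vector Machine": {"accuracy": 81.3, "precision": 14.5, "recall": 58.3, "f1": 22.5},
}


def best_by(metric: str) -> str:
    """Name of the model with the highest value of the given metric."""
    return max(reported, key=lambda name: reported[name][metric])


print("best by accuracy:", best_by("accuracy"))  # Random Forest
print("best by recall:", best_by("recall"))      # Logistic Regression
print("best by F1:", best_by("f1"))              # Support Vector Machine
```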

4.0 LIMITATIONS, DISCUSSION AND FUTURE RESEARCH DIRECTIONS

Other studies focused on fasting glucose levels to predict diabetes, although type 2 diabetes is mostly caused by living circumstances. The result of this study indicates that living circumstances are not as suitable for predicting diabetes as fasting glucose levels, which was expected, given that most papers focus on fasting glucose levels. It should be noted that the reliability of the findings is affected by several factors. First, the database does not differentiate between type 1 and type 2 diabetes. In addition, many of its variables contain NULL values, which is why the authors assumed that a participant who does not report a condition does not have it. Second, the database is quite small, with only 20,000 cases. As precision and recall generally tend to improve with more training data, the results of this study might differ from those of other studies on the same topic that had more data available. Still, the outcome does not support the view that fasting glucose level is the only important predictor. Living circumstances are used in diabetes risk assessments, and rightly so. The data might still contribute to better results in diabetes prediction through machine learning when used together with glucose levels. Further research on machine learning for disease prediction and detection is needed, especially for the elderly. Additionally, the quality of databases needs to improve, as low-quality databases are a major problem in research in general.

Acknowledgment

The researchers would like to thank the National School of Development at Peking University in Beijing, PR China, for giving us access to the CHARLS database. http://charls.pku.edu.cn/en

REFERENCES

- Alghamdi M., Al-Mallah M., Keteyian S., Brawner C., Ehrman J., Sakr S. (2017). Predicting diabetes mellitus using SMOTE and ensemble machine learning approach: the Henry Ford Exercise Testing (FIT) project. PLoS One. 12: e0179805. doi:10.1371/journal.pone.0179805.

- American Diabetes Association (2012). Diagnosis and classification of diabetes mellitus. Diabetes Care, 35(1): 64–71. doi:10.2337/dc12-s064.

- Batis, C., Sotres-Alvarez, D., Gordon-Larsen, P., Mendez, M. A., Adair, L., & Popkin, B. (2014). Longitudinal analysis of dietary patterns in Chinese adults from 1991 to 2009. British Journal of Nutrition. 111(8): 1441-1451.

- Batista, G., & Monard, M.C. (2003). An Analysis of Four Missing Data Treatment Methods for Supervised Learning. Applied Artificial Intelligence. 17: 519-533.

- Bengo, Y. (2019). The Rise of Neural Networks and Deep Learning in Our Everyday Lives – A Conversation with Yoshua Bengio. Retrieved April 29, 2019, from https://emerj.com/ai-podcast-interviews/the-rise-of-neural-networks-and-deep-learning-in-our-everyday-lives-a-conversation-with-yoshua-bengio/

- Breiman, L. (2001). Random forests. Machine learning, 45(1): 5-32. doi:10.1023/A:1010933404324.

- Chan, J.C., Malik, V., Jia, W., et al. (2009). Diabetes in Asia: epidemiology, risk factors, and pathophysiology. JAMA. 301: 2129-2140.

- Cox M. E., Edelman D. (2009). Tests for screening and diagnosis of type 2 diabetes. Clin. Diabetes, 27: 132–138. doi:10.2337/diaclin.27.4.132.

- Duygu Ç., Esin D. (2011). An automatic diabetes diagnosis system based on LDA-wavelet support vector machine classifier. Expert Syst. Appl. 38: 8311–8315.

- Georga E. I., Protopappas V. C., Ardigo D., Marina M., Zavaroni I., Polyzos D., et al. (2013). Multivariate prediction of subcutaneous glucose concentration in type 1 diabetes patients based on support vector regression. IEEE J. Biomed. Health Inform. 17: 71–81. doi:10.1109/TITB.2012.2219876.

- Graham, J. W. (2009). Missing Data Analysis: Making it Work in the Real World. Annual Review of Psychology. Vol. 60:549-576.

- Iancu I., Mota M., Iancu E. (2008). Method for the analysing of blood glucose dynamics in diabetes mellitus patients. Proceedings of the 2008 IEEE International Conference on Automation, Quality and Testing, Robotics, Cluj-Napoca. doi:10.1109/AQTR.2008.4588883

- Japkowicz N., Stephen, S. (2002). The Class Imbalance Problem: A Systematic Study. Intelligent Data Analysis. 6:429-449.

- Kavakiotis I., Tsave O., Salifoglou A., Maglaveras N., Vlahavas I., Chouvarda I. (2017). Machine learning and data mining methods in diabetes research. Comput. Struct. Biotechnol. J. 15: 104–116. doi:10.1016/j.csbj.2016.12.005

- Lee B. J., Kim J. Y. (2016). Identification of type 2 diabetes risk factors using phenotypes consisting of anthropometry and triglycerides based on machine learning. IEEE J. Biomed. Health Inform. 20: 39–46. doi:10.1109/JBHI.2015.2396520

- Nather A., Bee C.S., Huak C.Y., Chew J.L.L., Lin C.B., Neo S., Sim E.Y. (2008). Epidemiology of diabetic foot problems and predictive factors for limb loss. J. Diab. Complic., 22(2): 77-82.

- Pant, A. (2019). Introduction to Logistic Regression. Retrieved April 29, 2019, from https://towardsdatascience.com/introduction-to-logistic-regression-66248243c148.

- Papatheodorou K., Banach M., Edmonds M., Papanas N., Papazoglou, D. (2015). Complications of diabetes. J. Diabetes Res.1-5.

- Razavian N., Blecker S., Schmidt A. M., Smith-McLallen A., Nigam S., Sontag D. (2015). Population-level prediction of type 2 diabetes from claims data and analysis of risk factors. Big Data. 3: 277–287. doi:10.1089/big.2015.0020.

- Soumya D., Srilatha B. (2011). Late stage complications of diabetes and insulin resistance. J. Diabetes Metab. 2(167): 2-7.

- Statista – The Statistics Portal. (2019). Children and old-age dependency ratio in China from 1990 to 2100. Retrieved March 13, 2019, from https://www.statista.com/statistics/251535/child-and-old-age-dependency-ratio-in-china/

- Suykens, J. A., & Vandewalle, J. (1999). Least squares support vector machine classifiers. Neural processing letters, 9(3), 293-300. doi:10.1023/A:1018628609742

- Xu, Y., Wang, L., He, J., Bi, Y., Li, M., Wang, T., et al. (2013). Prevalence and control of diabetes in Chinese adults. JAMA. 310: 948–959.

- Yu, L., Liu, H. (2004). Efficient Feature Selection via Analysis of Relevance and Redundancy. JMLR, 5: 1205-1224.

- Zhang, S., Zhang, C., Yang, Q. (2002). Data Preparation for Data Mining. Applied Artificial Intelligence. 17: 375 - 381.

- Zou, Q., Qu, K., Luo, Y., Yin, D., Ju, Y., & Tang, H. (2018). Predicting Diabetes Mellitus With Machine Learning Techniques. Frontiers in genetics.9: 515. doi:10.3389/fgene.2018.0051.